About CheckMate

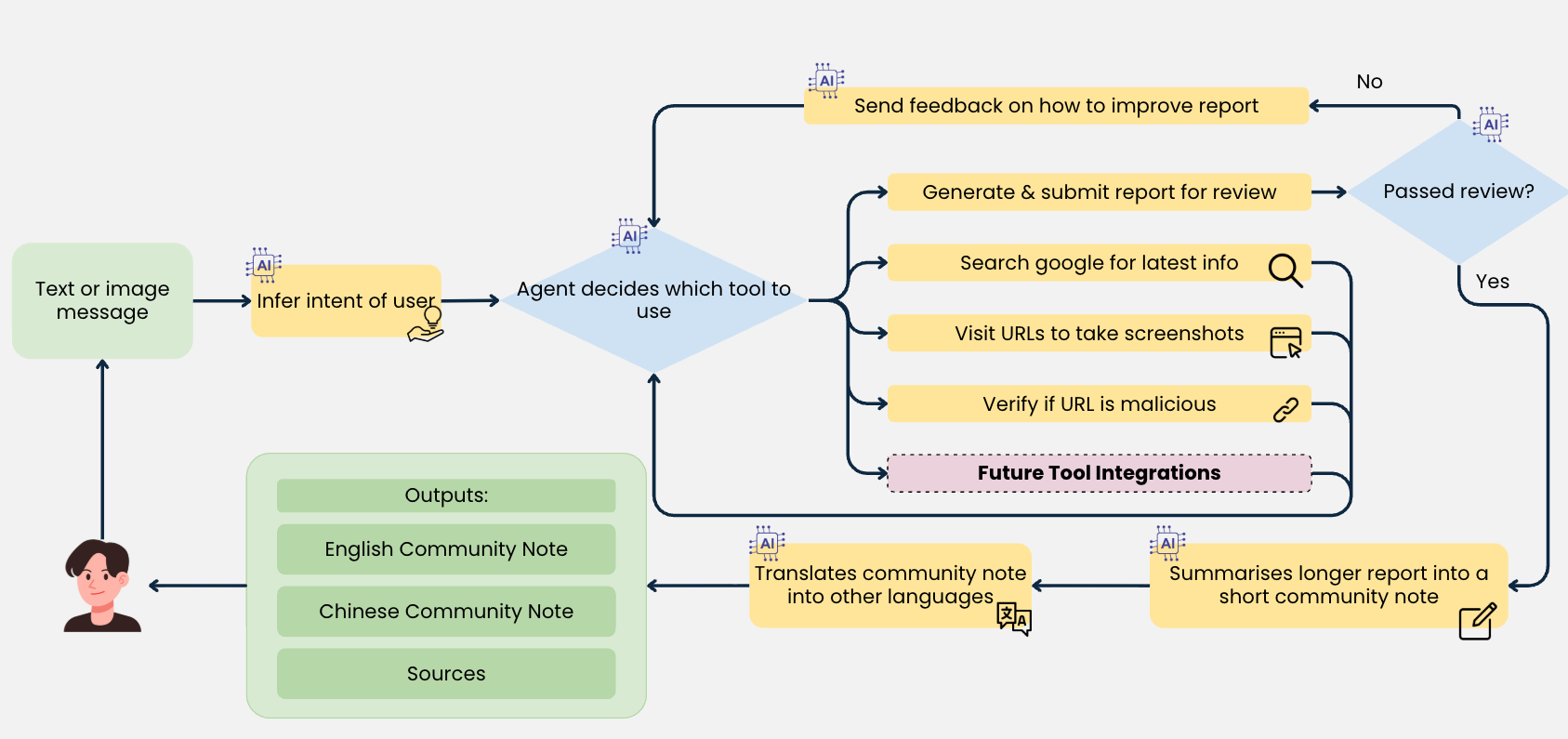

CheckMate is a service that lets anyone send dubious content to our WhatsApp number, and uses a combination of AI and crowdsourcing to check it on their behalf. The AI side is an agent that can perform web searches, visit webpages, and call services that scan URLs for malicious content. It uses any combination of these “tools” until it is confident in its judgement, then drafts a report that is summarised into a “community note” for end-user consumption.
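The loop described above can be sketched roughly as follows. This is an illustration only, not CheckMate's actual code: `run_agent`, `choose_next`, `summarise`, and the `scan_url` tool are hypothetical names, and the policy function stands in for what would be an LLM call in a real agent.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical shapes: a tool maps an input string to a text result, and a
# decision is (tool name, tool input), or None once the agent is confident.
Tool = Callable[[str], str]
Decision = Optional[Tuple[str, str]]


@dataclass
class AgentState:
    submission: str
    evidence: List[Tuple[str, str]] = field(default_factory=list)


def summarise(report: str, limit: int = 120) -> str:
    # Placeholder for the summarisation step: collapse whitespace and truncate.
    return " ".join(report.split())[:limit]


def run_agent(submission: str, tools: Dict[str, Tool],
              choose_next: Callable[[AgentState], Decision],
              max_steps: int = 5) -> str:
    state = AgentState(submission)
    for _ in range(max_steps):  # hard cap so the loop always terminates
        decision = choose_next(state)
        if decision is None:  # confident enough to stop gathering evidence
            break
        name, tool_input = decision
        state.evidence.append((name, tools[name](tool_input)))
    # Draft a report from the gathered evidence, then shorten it into a note.
    report = "\n".join(f"[{name}] {result}" for name, result in state.evidence)
    return summarise(report)


def demo_policy(state: AgentState) -> Decision:
    # Toy policy: scan the submitted URL once, then stop.
    if not state.evidence:
        return ("scan_url", state.submission)
    return None


demo_tools = {"scan_url": lambda url: f"{url} flagged as phishing"}
note = run_agent("http://example.test/win", demo_tools, demo_policy)
print(note)
```

Keeping the decision policy as a plain function argument makes it easy to swap a toy rule for a model call without touching the loop itself.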

CheckMate's AI agent workflow

Building Our AI Agent

Prior to the current AI-based system, CheckMate had already been operating for slightly over a year, relying on crowdsourcing to deliver checks at scale. From this, we had built up a substantial dataset of verified submissions. Using 200 of these past examples, we assembled a test set to evaluate our first AI agent. The initial results were promising, but with clear areas for improvement.

The Challenge: Improving Accuracy at Scale

To make our AI agent better, we zoomed in on examples where its outputs fell short: some were outright wrong, others lacked nuance.

We wanted to understand why this happened.

This was easier said than done. Our AI agent comprised multiple intermediate steps and tool calls. A poor response could stem from a bad interpretation of user intent, a mis-worded search, an inaccessible webpage, or simple hallucination.

Yet, all we could see at a glance was the agent’s final output.

Determining the root cause meant deep-diving into application logs, a task not for the faint-hearted.

Improving the system beyond the first version was both inefficient and time-consuming. It became clear we needed a better way to observe and debug our AI workflows.

Adopting Langfuse

When we searched for an LLM observability solution, Langfuse checked all the boxes. Its discounts for non-profits sweetened the deal further, allowing us to get started on the cloud-hosted version without self-hosting or stretching our budget. We now rely heavily on Langfuse’s features to monitor, improve, and manage our AI agent.

How We Use Langfuse

Dashboards and Tracing

Langfuse’s dashboards and well-thought-out tracing data model gave us visibility into the inner workings of our AI agent, allowing us to iterate and improve at a much faster rate.

Later, as we moved into production, these same tools helped us quickly triage and address issues that might otherwise have slipped under the radar.
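To make the value of step-level tracing concrete, here is a minimal sketch of the general idea: every tool call is recorded as a span with its input, output, error, and duration, so a bad final answer can be traced back to the step that caused it. This is not Langfuse's SDK or data model. `Trace`, `Span`, and `first_failure` are hypothetical names used purely for illustration.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Iterator, List, Optional


@dataclass
class Span:
    name: str
    input_data: Any
    output: Any = None
    error: Optional[str] = None
    duration_s: float = 0.0


@dataclass
class Trace:
    name: str
    spans: List[Span] = field(default_factory=list)

    @contextmanager
    def span(self, name: str, input_data: Any) -> Iterator[Span]:
        record = Span(name=name, input_data=input_data)
        start = time.perf_counter()
        try:
            yield record
        except Exception as exc:
            record.error = repr(exc)  # keep the failure attached to its step
            raise
        finally:
            record.duration_s = time.perf_counter() - start
            self.spans.append(record)

    def first_failure(self) -> Optional[Span]:
        # The earliest failing step is usually the best root-cause candidate.
        return next((s for s in self.spans if s.error), None)


trace = Trace("check-submission")
with trace.span("web_search", "is this lottery real") as s:
    s.output = ["result A", "result B"]
try:
    with trace.span("visit_page", "http://example.test") as s:
        raise TimeoutError("page did not load")
except TimeoutError:
    pass  # a real agent might retry or fall back to another tool here

failed = trace.first_failure()
print(failed.name, failed.error)
```

With only the final output visible, the timeout above would look like a vague or wrong answer. With spans recorded, the inaccessible webpage shows up directly.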

Prompt Management

Our mainly volunteer team was small, and technical talent was thin on the ground.

Langfuse’s prompt management allowed us to decouple prompts from code, letting non-technical volunteers contribute to improving prompts directly. This boosted iteration speed and lowered our error rate significantly within just a few weeks – enough for us to confidently move the system into production.
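The decoupling can be pictured with a small in-memory stand-in. This is a sketch of the pattern, not Langfuse's API: `PromptStore`, `publish`, `get`, the `production` label, and the double-curly-brace placeholder syntax are all assumptions made for the example. The point is that application code asks for a prompt by name, while the text itself is versioned and edited elsewhere.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str

    def compile(self, **variables: str) -> str:
        # Fill {{name}} placeholders; fail loudly if a variable is missing.
        def fill(match: "re.Match[str]") -> str:
            key = match.group(1)
            if key not in variables:
                raise KeyError(f"missing prompt variable: {key}")
            return variables[key]
        return re.sub(r"\{\{\s*(\w+)\s*\}\}", fill, self.template)


class PromptStore:
    def __init__(self) -> None:
        self._versions: Dict[str, List[PromptVersion]] = {}
        self._labels: Dict[Tuple[str, str], int] = {}

    def publish(self, name: str, template: str,
                label: str = "production") -> PromptVersion:
        # Each publish creates a new version and moves the label to it.
        versions = self._versions.setdefault(name, [])
        prompt = PromptVersion(len(versions) + 1, template)
        versions.append(prompt)
        self._labels[(name, label)] = prompt.version
        return prompt

    def get(self, name: str, label: str = "production") -> PromptVersion:
        return self._versions[name][self._labels[(name, label)] - 1]


store = PromptStore()
store.publish("community-note", "Summarise for a general reader: {{report}}")
# A non-technical contributor revises the wording; no code change is needed.
store.publish("community-note", "Summarise in two plain sentences: {{report}}")

prompt = store.get("community-note")
text = prompt.compile(report="The link is a phishing page.")
print(prompt.version, text)
```

Because older versions are kept, a revision that performs worse can be rolled back by pointing the label at an earlier version instead of redeploying code.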

Langfuse in Production

Using Langfuse in production has been invaluable.

We can now detect emerging patterns that might have gone unnoticed. For instance, we spotted a new trend where scammers’ phishing sites showed one version of a webpage to our crawler and another to real users, confusing our AI agent. Langfuse helped us identify this quickly, and we were able to adjust our agent to counter this new tactic.
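The post does not say how the agent was adjusted, so the following is only one plausible way to flag this kind of cloaking, offered as an assumption and not as CheckMate's method: fetch the same URL under a crawler-like and a browser-like user agent and compare the two responses. The user-agent strings, the `looks_cloaked` helper, the 0.6 threshold, and the injected `fetch` function are all hypothetical.

```python
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical fetch signature: (url, user_agent) -> page text.
Fetch = Callable[[str, str], str]

CRAWLER_UA = "example-crawler/1.0"
BROWSER_UA = "Mozilla/5.0 (Linux; Android 14) Mobile"


def looks_cloaked(url: str, fetch: Fetch, threshold: float = 0.6) -> bool:
    """Return True when the two views of the page differ substantially."""
    as_crawler = fetch(url, CRAWLER_UA)
    as_browser = fetch(url, BROWSER_UA)
    similarity = SequenceMatcher(None, as_crawler, as_browser).ratio()
    return similarity < threshold


def cloaking_site(url: str, user_agent: str) -> str:
    # Fake site for the demo: harmless to crawlers, phishing to real users.
    if user_agent == CRAWLER_UA:
        return "Fresh bread baked daily."
    return "URGENT: VERIFY YOUR BANK PIN 123456 NOW"


def honest_site(url: str, user_agent: str) -> str:
    return "Fresh bread baked daily."


print(looks_cloaked("http://example.test", cloaking_site))
print(looks_cloaked("http://example.test", honest_site))
```

Real pages vary between requests for benign reasons too (ads, timestamps, localisation), so a check like this would be one signal among several, not a verdict on its own.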

Moving Forward

Looking ahead, we plan to deepen our use of Langfuse even further.

CheckMate already has a core group of volunteers rating and labelling user submissions, and we intend to use Langfuse’s dataset feature to better manage this human evaluation pipeline.

As disinformation spreaders and scammers continue to evolve their tactics, we’re confident that our AI + crowdsourcing system, coupled with the observability Langfuse provides, will enable us to keep up and to keep our users safe and informed.